Picture courtesy: Shutterstock images

How does a machine see text?

Mapping raw data to numbers is not always as intuitive as it seems. It is easy to think of an image as a grid of pixel intensities, or of an audio clip as frequency and intensity varying over time, but what about the text in a document? How do you replace the words in a document with numerical representations? How do you ensure that those representations preserve the relations between words, and how do you reflect the dependence of each word on the words that come before it?

To answer these questions, we need to understand the concept of word embeddings. Word embedding is a technique for representing a word as a meaningful vector that captures the word's features in some latent space.

For example, suppose we get the word embeddings of the words "man", "boy" and "car" as unit vectors $w_1$, $w_2$ and $w_3$ respectively. We would expect "man" and "boy" to be closer to each other in the latent space than either is to "car", since "car" is less related to these two. Because the vectors have unit length, their dot product measures how closely they point in the same direction, so we expect $w_1^T w_2 > w_1^T w_3$ and $w_1^T w_2 > w_2^T w_3$. Try it out yourself at this link.
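The comparison above can be sketched in a few lines of Python. The three vectors below are made-up numbers chosen only for illustration; they are not the output of any trained model, and the helper names `normalize` and `dot` are my own.

```python
import math

def normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def dot(a, b):
    """Dot product; for unit vectors this equals the cosine similarity."""
    return sum(x * y for x, y in zip(a, b))

# Hypothetical 3-dimensional embeddings (assumed values, not from a real model).
w_man = normalize([0.9, 0.8, 0.1])
w_boy = normalize([0.8, 0.9, 0.2])
w_car = normalize([0.1, 0.2, 0.9])

sim_man_boy = dot(w_man, w_boy)
sim_man_car = dot(w_man, w_car)
sim_boy_car = dot(w_boy, w_car)

print("man . boy =", round(sim_man_boy, 3))
print("man . car =", round(sim_man_car, 3))
print("boy . car =", round(sim_boy_car, 3))
```

With these toy values, the "man"/"boy" similarity comes out well above both similarities involving "car", which is the pattern we would hope to see from real embeddings.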

Capturing features like this is the essence of word embeddings, which is why they often serve as feature extractors in deep learning models. More importantly, word embeddings can be obtained in both contextual and non-contextual settings. What do I mean by that?

#1. Non-Contextual Word Embeddings

When a word is pinned to one vector representation no matter where it appears, its embedding is said to be non-contextualised. To understand this, take the example "George Washington lives in Washington". When modelling such a sentence, both occurrences of "Washington" get the same word embedding, even though one is a person and the other a place. The embedding is independent of the context (topic or type) of the word in the sentence. It might seem that non-contextual word embeddings don't offer much because of this obvious drawback, but in reality they serve as the stepping stone for developing contextual word embeddings, which we will see next. Popular non-contextual word embeddings include word2vec, GloVe and fastText.
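A non-contextual embedding behaves like a plain lookup table from word to vector. Here is a minimal sketch of that idea; the table `embedding_table`, its 2-dimensional values and the function `embed_static` are all hypothetical, and real models such as word2vec or GloVe learn much larger tables from data.

```python
# Hypothetical lookup table: one fixed vector per word (assumed values).
embedding_table = {
    "george": [0.9, 0.1],
    "washington": [0.5, 0.5],
    "lives": [0.0, 1.0],
    "in": [0.2, 0.8],
}

def embed_static(tokens):
    """Look up each token's vector; the surrounding words are ignored."""
    return [embedding_table[token.lower()] for token in tokens]

tokens = "George Washington lives in Washington".split()
vectors = embed_static(tokens)

# Both occurrences of "Washington" map to the same vector.
print(vectors[1] == vectors[4])
```

Because the lookup never sees the neighbouring words, the person and the place are indistinguishable at this stage.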

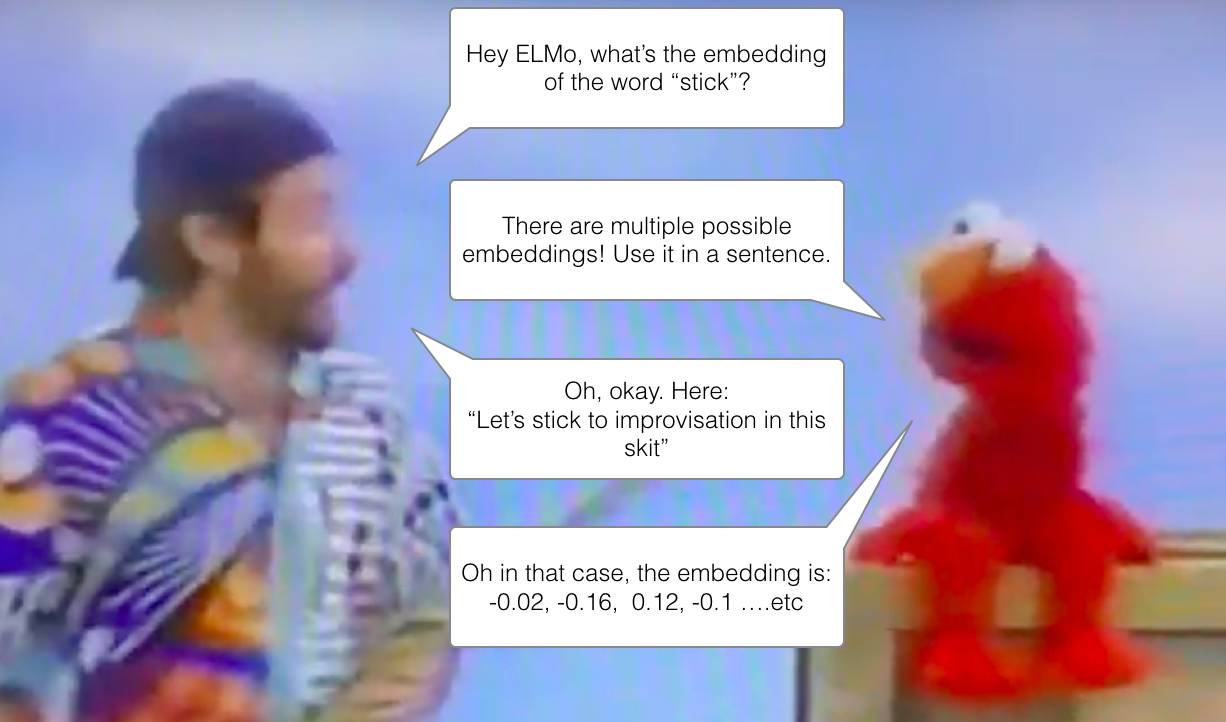

#2. Contextual Word Embeddings

Contextual word embeddings depend on the sentence, the position and the type of the word, and hence are generated on the fly. So, in the previous example, "George Washington(1) lives in Washington(2)", the two occurrences of "Washington" now get different word embeddings. These embeddings capture the meaning of a word in its context, and some systems like BERT are so powerful that they have single-handedly reset the state of the art (SOTA) for the majority of NLP tasks. Recent systems like knowBERT and E-BERT have even incorporated knowledge graph entity relations into their models, further enriching the word embeddings. Popular contextual word embeddings include ELMo and BERT.
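To make "generated on the fly" concrete, here is a deliberately simplified toy: each word's fixed vector is blended with the average of its immediate neighbours' vectors. This is only an illustration of context dependence and is not how ELMo or BERT work (they use learned recurrent or attention layers). The table `static_table`, the function `embed_contextual` and the `window` and `mix` parameters are all assumptions of this sketch.

```python
# Hypothetical fixed vectors used as the starting point (assumed values).
static_table = {
    "george": [0.9, 0.1],
    "washington": [0.5, 0.5],
    "lives": [0.0, 1.0],
    "in": [0.2, 0.8],
}

def embed_contextual(tokens, window=1, mix=0.5):
    """Toy contextual embedding: blend each word with its neighbours' mean."""
    static = [static_table[token.lower()] for token in tokens]
    contextual = []
    for i, vec in enumerate(static):
        lo, hi = max(0, i - window), min(len(static), i + window + 1)
        neighbours = [static[j] for j in range(lo, hi) if j != i]
        if neighbours:
            context = [sum(col) / len(neighbours) for col in zip(*neighbours)]
        else:
            context = vec  # a one-word sentence has no context to mix in
        contextual.append([(1 - mix) * v + mix * c for v, c in zip(vec, context)])
    return contextual

tokens = "George Washington lives in Washington".split()
vectors = embed_contextual(tokens)

# The two occurrences of "Washington" have different neighbours,
# so they end up with different vectors.
print(vectors[1] != vectors[4])
```

Even this crude mixing is enough to separate the two occurrences, since the first sits between "George" and "lives" while the second only follows "in".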

Picture courtesy: Illustrated-BERT

Though contextual word embeddings seem all-powerful, it is sometimes simply not possible to assign a concrete word embedding in ambiguous sentences such as "Time flies like an arrow" or "Fruit flies like a banana", where "flies" can be read either as a verb or as part of a noun phrase.

Stay Well, Stay Safe!